Traditionally, AI models are rewarded only when they get the correct answer. “They are scored the same way as students taking multiple-choice tests,” said Pengtao Xie, lead author of the study and a professor in the Department of Electrical and Computer Engineering at the UC San Diego Jacobs School of Engineering. “If you choose the correct answer, even if you guess, you get full credit.” In contrast, his team’s approach evaluates how the model reasons its way to an answer. “You’re rewarded for thinking logically, step by step, rather than just guessing correctly,” Xie said. “If you get the right answer using the wrong logic, you don’t get the reward.”

This marks a shift from asking “Did the AI get the answer right?” to asking “Did the AI think it through?” Xie added that the approach could provide a much-needed safety net for high-stakes applications such as medical diagnostics, financial analysis, and engineering.
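To make the contrast concrete, here is a minimal Python sketch of the two scoring schemes. It assumes, hypothetically, that a process reward model has already given each reasoning step a score between 0 and 1, and it scores a whole solution by its weakest step. That is one common aggregation choice, not necessarily the one used in the study; the function names and example scores are illustrative only.

```python
from typing import Sequence


def outcome_reward(final_answer: str, correct_answer: str) -> float:
    """Multiple-choice-style scoring: full credit for a correct final answer, however it was reached."""
    return 1.0 if final_answer == correct_answer else 0.0


def process_reward(step_scores: Sequence[float]) -> float:
    """Process-style scoring: a solution is only as good as its weakest reasoning step.

    step_scores are hypothetical per-step scores in [0, 1], such as a process
    reward model might assign; an empty solution gets no credit.
    """
    return min(step_scores) if step_scores else 0.0


# Two hypothetical solutions that both reach the correct answer "B".
lucky_guess = ("B", [0.9, 0.1, 0.8])   # one step is badly flawed
sound_chain = ("B", [0.9, 0.85, 0.95])  # every step holds up
for answer, steps in (lucky_guess, sound_chain):
    print(outcome_reward(answer, "B"), process_reward(steps))
```

Both solutions earn full outcome credit (1.0), but under process-style scoring the lucky guess receives only 0.1, while the sound chain of reasoning receives 0.85.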

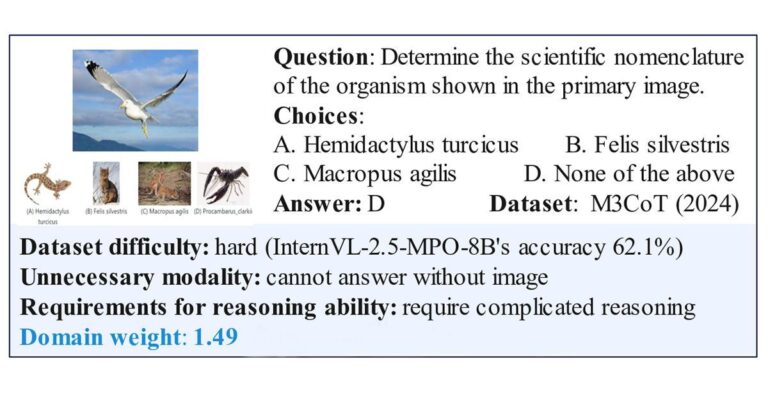

The next challenge was to make this style of training work for AI models that must reason over both language and images. Until now, it has worked well mainly for text-only models. Extending it to multimodal models is harder because the quality of multimodal training datasets varies widely: some contain rich, high-quality information, while others contain noise, oversimplified content, or irrelevant material. Feeding all of this data to a model with equal emphasis can slow training and reduce performance. “It’s like trying to learn calculus when half your reading list consists of kindergarten coloring books,” Xie explained.

To address this challenge, Xie’s team designed a system that acts as a smart curator of training data. Rather than treating all datasets as equally valuable, it learns to assign a different level of importance to each one, downweighting low-quality data and focusing on challenging, high-quality samples. The system also evaluates itself on a separate set of problems and uses that feedback to adjust how it prioritizes the training data.
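The curation idea can be illustrated with a toy bilevel optimization loop in Python with NumPy. This is a hedged sketch of generic domain reweighting, not the team’s implementation. A two-feature linear regression stands in for the reward model, and the two hypothetical domains differ only in label quality. The domain weights are updated with a one-step-lookahead meta-gradient computed on a small, trusted meta set, loosely mirroring the system evaluating itself on a separate set of problems. All names, data, and hyperparameters are assumptions.

```python
import numpy as np


def softmax(a: np.ndarray) -> np.ndarray:
    e = np.exp(a - a.max())
    return e / e.sum()


def mse_grad(w: np.ndarray, X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Gradient of mean squared error for a linear model (stand-in for the reward model)."""
    return 2 * X.T @ (X @ w - y) / len(y)


def train_with_domain_weights(domains, meta_set, steps=300, lr=0.05, meta_lr=1.0):
    """Toy bilevel loop: learn model weights w and one importance weight per domain.

    Lower level: one gradient step on the domain-weighted training loss.
    Upper level: adjust the domain logits so that this step lowers the loss on a
    separate meta set (one-step lookahead, a common first-order approximation).
    """
    names = list(domains)
    alpha = np.zeros(len(names))  # domain logits; weights = softmax(alpha)
    w = np.zeros(meta_set[0].shape[1])
    for _ in range(steps):
        pi = softmax(alpha)
        grads = np.stack([mse_grad(w, *domains[n]) for n in names])  # (domains, features)
        w_next = w - lr * pi @ grads                # lower-level update of the model
        g_meta = mse_grad(w_next, *meta_set)        # feedback from the meta set
        d_pi = -lr * grads @ g_meta                 # d(meta loss) / d(domain weight)
        alpha -= meta_lr * pi * (d_pi - pi @ d_pi)  # chain rule through the softmax
        w = w_next
    return dict(zip(names, softmax(alpha))), w


rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])


def make_domain(n, label_w, noise=0.1):
    X = rng.normal(size=(n, 2))
    return X, X @ label_w + noise * rng.normal(size=n)


# Hypothetical data: one well-labeled domain and one whose labels follow the wrong rule.
domains = {"high_quality": make_domain(200, true_w),
           "low_quality": make_domain(200, -true_w)}
meta_set = make_domain(100, true_w)

weights, w = train_with_domain_weights(domains, meta_set)
print(weights)  # most of the weight ends up on "high_quality"
```

Gradients from the mislabeled domain point away from what the meta set rewards, so its weight shrinks as training proceeds. A realistic version would replace the linear model with a neural reward model and the exact gradients with minibatch estimates.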

“Our system doesn’t just learn from everything,” Xie said. “It learns what’s worth learning. Quality over quantity.”

When tested on multiple visual and mathematical reasoning benchmarks, the team’s system consistently outperformed other techniques. AI models trained with the system achieved the highest published score, 85.2%, on MathVista, a widely used test of visual mathematical reasoning whose word problems include graphs and diagrams. The result was verified by the MathVista organizers.

Xie added that this approach will democratize AI by allowing smaller models that can run on personal computers to match or outperform larger proprietary models such as Gemini and GPT on difficult mathematical benchmarks. “You don’t need a trillion-dollar computing cluster to get cutting-edge reasoning,” he said.

The team is now refining the system to evaluate the quality of individual questions rather than entire datasets. They are also working to make training faster and less computationally demanding.
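Moving from dataset-level to question-level weights can be sketched in a similar way. The snippet below is an assumption about how such scoring might look, not the team’s method. It follows the example-reweighting heuristic of Ren et al. (2018), “Learning to Reweight Examples for Robust Deep Learning”: each training example is scored by how well its gradient agrees with the gradient on a trusted meta set, negative scores are clipped to zero, and the rest are normalized. A linear model again stands in for the reward model, and the data and the `instance_weights` helper are hypothetical.

```python
import numpy as np


def instance_weights(X, y, w, X_meta, y_meta):
    """Weight each training example by how well its gradient agrees with the meta-set gradient.

    A positive score means a small gradient step on that example would also lower
    the meta-set loss; negative scores are clipped to zero and the rest normalized.
    """
    per_example_grads = 2 * (X @ w - y)[:, None] * X             # (examples, features)
    g_meta = 2 * X_meta.T @ (X_meta @ w - y_meta) / len(y_meta)  # mean meta gradient
    scores = np.maximum(per_example_grads @ g_meta, 0.0)
    total = scores.sum()
    # Fall back to uniform weights if no example helps on the meta set.
    return scores / total if total > 0 else np.full(len(y), 1.0 / len(y))


rng = np.random.default_rng(1)
true_w = np.array([2.0, -1.0])
X = rng.normal(size=(100, 2))
y = X @ true_w
y[50:] *= -1  # hypothetical: the last 50 questions carry wrong labels
X_meta = rng.normal(size=(50, 2))
y_meta = X_meta @ true_w

weights = instance_weights(X, y, np.zeros(2), X_meta, y_meta)
print(weights[:50].sum(), weights[50:].sum())
```

Nearly all of the weight lands on the correctly labeled questions. Per-example scores cost one gradient per question, which is one reason question-level curation raises the computational concerns the team is working on.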

Full study: “DreamPRM: Domain-Reweighted Process Reward Model for Multimodal Reasoning.” Co-authors include Qi Cao, Ruiyi Wang, Ruiyi Zhang, and Sai Ashish Somayajula, all of the University of California, San Diego.

This research was supported by the National Science Foundation (IIS2405974 and IIS2339216) and the National Institutes of Health (R35GM157217).
