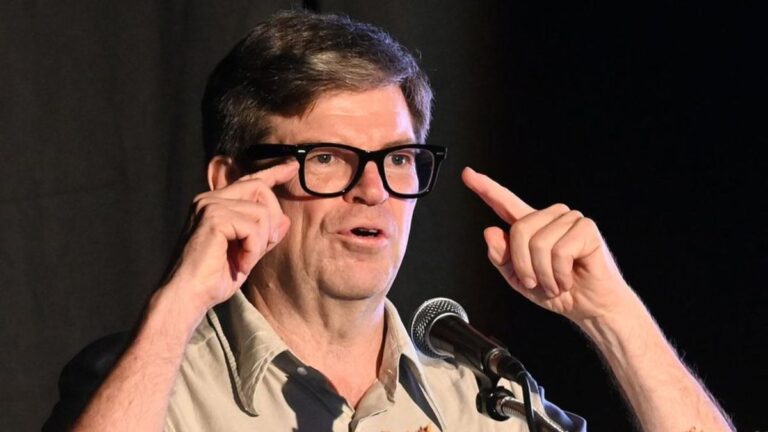

Speaking at a session at the World Economic Forum in Davos, Yann LeCun, chief AI scientist at Meta, predicted "a new paradigm shift in AI architecture." He says that AI as we know it today, meaning generative AI and large language models (LLMs), is quite limited in what it can do. The basics have been achieved, but that is still not enough. Within the next five years, "no sane person will be using them anymore," he said. "I think the current (AI) paradigm has a fairly short lifespan, probably three to five years," LeCun added.

“A new paradigm of AI architecture will emerge that does not have the limitations of current AI systems,” he predicts.

Meta's chief AI scientist also believes that in the coming years we could enter a "decade of robotics," in which entirely new technologies combining robots and AI find real-world applications.

LeCun also explained why he thinks current AI models are so limited, giving four reasons. First, current models lack awareness and understanding of the physical world, he says. Second, there is a limit to how much they can remember at one time, and they have no persistent memory. Third, they lack reasoning ability. And fourth, they are unable to perform complex planning tasks.

"So we're going to see a further revolution in AI over the next few years. Maybe it's not generative in the sense that we understand it today, so it might have to be renamed," LeCun said.

LeCun noted that the full "AI revolution" may still be a decade away, but given the current pace of progress in AI, big changes may be on the horizon.

“LLMs are good at manipulating language, but not thinking about it,” LeCun says.

During his technology discussion at Davos, LeCun may also have revealed what Meta's AI lab is currently working on.

"So what we're working on is getting the system to build a mental model of the world. If the plan we're working on succeeds on the timeline we're hoping for, within three to five years a system with a completely different paradigm will be completed," he said. "They may have some common sense. They may be able to learn how the world works by observing the world and perhaps interacting with it."

Interestingly, while Meta's chief AI scientist was talking about the inability of current AI systems and LLMs to perform complex tasks, OpenAI and Perplexity on Friday announced new AI agents that they claim excel at performing complex multi-step tasks. For example, OpenAI's AI agent, called Operator and currently available only in the US, can order groceries when you give it a shopping list or book a flight when you share your itinerary. It can also create memes. The pitch, in essence, is that much of what you do on the web can be done for you by an AI agent.