Insider Brief

Semi-stealth startup Extropic has released a paper detailing its technical approach. According to the paper, the company is building technology based on parameterized stochastic analog circuits. The company’s “accelerators” promise significant improvements in both algorithm runtime and energy efficiency.

For a litepaper, it’s some intense reading.

That being said, the team at Extropic, a somewhat stealthy startup that recently announced its $14.1 million seed round, has released a paper to better flesh out what the company is doing.

According to this paper, Extropic is building technology based on parameterized stochastic analog circuits. Put another way, these are highly tunable electronic circuits that can handle a wide range of tasks by mimicking the randomness found in nature. That makes them particularly useful for complex computing tasks involving uncertainty and prediction.

If it scales, this would represent a significant departure from traditional digital computing.

Extropic’s “accelerators” promise significant improvements in both the runtime and energy efficiency of algorithms that require sampling from complex energy landscapes. Inspired by the principles of Brownian motion, this new class of accelerators harnesses programmable randomness and places the company at the forefront of generative AI innovation.
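To make "sampling from an energy landscape" concrete, here is a minimal software sketch of overdamped Langevin dynamics, a textbook sampling method driven by Brownian-motion-like noise. It is not taken from the paper and does not describe the company's hardware. The double-well energy, step size, temperature, and every function name are assumptions chosen purely for illustration.

```python
import math
import random


def energy(x: float) -> float:
    """Hypothetical double-well energy landscape with minima at x = -1 and x = +1."""
    return (x * x - 1.0) ** 2


def grad_energy(x: float) -> float:
    """Analytic derivative of the double-well energy above."""
    return 4.0 * x * (x * x - 1.0)


def langevin_samples(n_steps, step_size=0.01, temperature=0.5, x0=0.0, seed=0):
    """Draw approximate samples from p(x) proportional to exp(-energy(x) / temperature).

    Each step drifts downhill along the energy gradient and adds Gaussian
    noise, which is what lets the walker hop between the two wells.
    All default values are illustrative assumptions.
    """
    rng = random.Random(seed)
    noise_scale = math.sqrt(2.0 * temperature * step_size)
    x = x0
    samples = []
    for _ in range(n_steps):
        x = x - step_size * grad_energy(x) + noise_scale * rng.gauss(0.0, 1.0)
        samples.append(x)
    return samples


if __name__ == "__main__":
    draws = langevin_samples(50_000)
    left = sum(1 for x in draws if x < 0) / len(draws)
    print(f"fraction of samples in the left well: {left:.2f}")
```

On a digital processor every noise term has to be produced by a pseudo-random number generator, one step at a time. The article's claim, as far as it can be read from the text, is that stochastic analog circuits would supply this randomness physically instead.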

The company’s announcement is timely. The team writes that the tech industry is currently grappling with an insatiable demand for computing power, spurred by the rapid advancement of artificial intelligence (AI).

This need for computing power is running up against the physical limitations of current technology. Historically, the march of computing efficiency has been predicted by Moore’s law, and the rising demand for computing has been met largely thanks to the miniaturization of CMOS transistor technology. However, the paper argues that this technology is approaching its physical limits: as transistors approach atomic size, thermal noise makes reliable digital operation increasingly difficult. The team calls this the “Moore’s Wall.”

This wall looms just as energy requirements for AI are surging, leading to proposals for extreme solutions such as nuclear-powered data centers. The paper reports that sustainably scaling computing power and AI would require an unprecedented infrastructure and engineering overhaul.

Extropic’s paper offers a glimpse into an alternative inspired by the efficiency of biological systems. In nature, computing is neither rigid nor exclusive. Rather, it thrives on inherent randomness and discrete interactions within intracellular chemical reaction networks, the paper states. This biological efficiency suggests a potential pathway beyond the limitations of traditional digital logic.

At the core of Extropic’s approach is the energy-based model (EBM), which sits at the intersection of thermodynamics and probabilistic machine learning. These models, particularly exponential families, offer a way to efficiently parameterize probability distributions using minimal data. This is important for modeling rare but impactful events, because the model can keep its entropy high while still accurately reflecting the target distribution.
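For readers who want the formula behind the term, the standard textbook form of an exponential-family EBM can be written as below. The symbols $\theta_k$, $f_k$, and $Z(\theta)$ are notation assumed here for illustration and are not quoted from the paper.

$$
p_\theta(x) = \frac{1}{Z(\theta)} \exp\bigl(-E_\theta(x)\bigr),
\qquad
E_\theta(x) = -\sum_k \theta_k f_k(x),
\qquad
Z(\theta) = \int \exp\bigl(-E_\theta(x)\bigr)\,dx
$$

Here $E_\theta$ is the energy function, the $f_k$ are chosen feature functions, and $Z(\theta)$ normalizes the distribution. A well-known property is that, among all distributions whose feature averages $\mathbb{E}[f_k(x)]$ match given target values, the exponential-family member has the highest entropy. That is one way to read the article's point about staying as uncommitted as possible while still fitting limited data.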

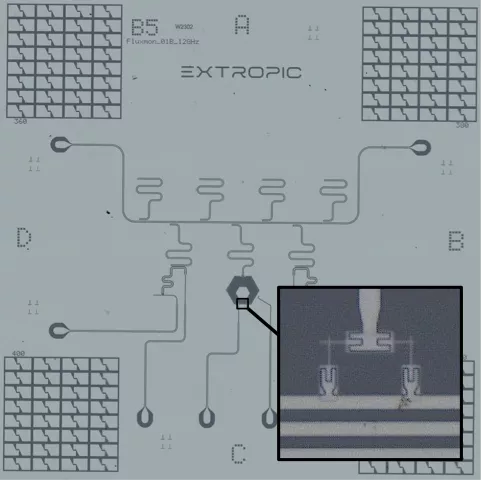

Additionally, Extropic’s litepaper delves into the technical capabilities of its superconducting chips, which are designed to operate at low temperatures and take advantage of the Josephson effect to access non-Gaussian probability distributions. The paper not only highlights the energy efficiency of these chips but also emphasizes the company’s commitment to expanding the reach of the technology through semiconductor devices suitable for room-temperature operation.

According to the paper, Extropic’s superconducting chips are completely passive; that is, they consume energy only when their state is being measured or manipulated. The paper suggests this makes these neurons the most energy efficient in the universe, and that the systems can be very energy efficient at large scale. The company intends to target low-volume, high-value customers, such as governments, banks, and private clouds, with these systems.

Beyond hardware, Extropic is building a software layer that bridges abstract EBM specifications and practical hardware control. This effort aims to transcend the memory limitations inherent in deep learning and to herald a new era of AI acceleration that could redefine the boundaries of artificial intelligence.

In fact, the paper lists several advantages that the technology is poised to offer:

It would extend hardware scaling far beyond the constraints of digital computing, enable AI accelerators that are much faster and more energy efficient than digital processors (CPUs/GPUs/TPUs/FPGAs), and unlock powerful stochastic AI algorithms that cannot feasibly be run on digital processors.

Extropic was founded by Guillaume Verdon and Trevor McCourt. In December, Extropic announced it had closed its seed funding round, raising a total of $14.1 million. The round was led by Steve Jang of Kindred Ventures, with participation from venture capital firms such as Buckley Ventures, HoF Capital, Julian Capital, Marque VC, OSS Capital, Valor Equity Partners, and Weekend Fund.
