The world’s fastest AI chip maker makes a splash with DeepSeek onboarding

Cerebras says its solution ranks 57 times faster than GPUs, but doesn’t mention which GPUs

DeepSeek R1 will run in the Cerebras cloud, and the data will remain in the US

Cerebras has announced, in a surprising move, that it will support DeepSeek, more specifically the DeepSeek R1 70B reasoning model. The move comes after Groq and Microsoft confirmed that they would bring the new kid on the AI block to their respective clouds. AWS and Google Cloud have yet to follow, but anyone can run the open source model anywhere, even locally.

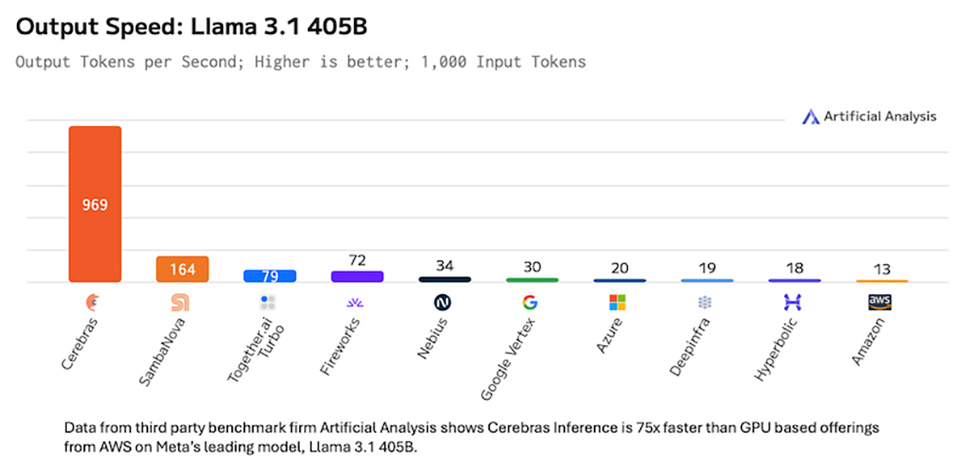

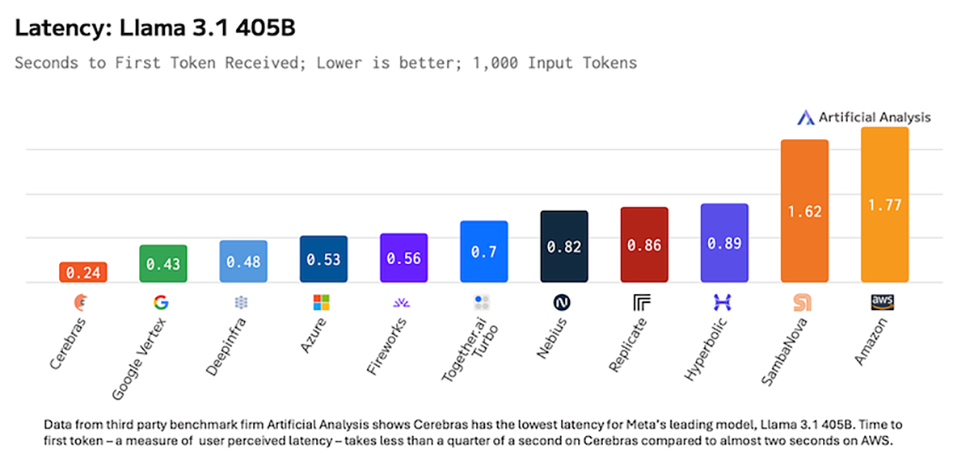

The AI inference chip specialist runs DeepSeek R1 70B at 1,600 tokens per second, which it claims is 57 times faster than GPU-based providers. From that, one can deduce that 28 tokens per second is what the GPU-in-the-cloud solution (in this case DeepInfra) apparently reaches. Coincidentally, Cerebras’ latest chip is 57 times larger than the H100. I have reached out to Cerebras to learn more about that claim.
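The 28 tokens per second figure falls out of simple division: if 1,600 tokens per second is 57 times faster than the baseline, the baseline is 1,600 / 57. The minimal sketch below reproduces that deduction and checks it in reverse. The function names are illustrative, and it assumes both figures refer to the same model and comparable output lengths, which Cerebras has not confirmed.

```python
def implied_baseline(fast_rate: float, speedup: float) -> float:
    """Tokens/sec the slower system must reach for fast_rate to be `speedup` times faster."""
    return fast_rate / speedup


def speedup(fast_rate: float, slow_rate: float) -> float:
    """How many times faster fast_rate is than slow_rate."""
    return fast_rate / slow_rate


CEREBRAS_TPS = 1600.0   # Cerebras' figure for DeepSeek R1 70B
CLAIMED_SPEEDUP = 57.0  # Cerebras' "57 times faster than GPUs" claim

baseline = implied_baseline(CEREBRAS_TPS, CLAIMED_SPEEDUP)
print(f"Implied GPU baseline: {baseline:.1f} tokens/sec")
print(f"Check: 1,600 vs 28 tokens/sec is {speedup(CEREBRAS_TPS, 28.0):.1f}x")
```

The implied baseline comes out at about 28.1 tokens per second, and 1,600 / 28 gives roughly 57.1x, so the two published numbers are consistent with each other.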

Research by Cerebras also demonstrated that DeepSeek is more accurate than OpenAI models on a number of tests. The model will run on Cerebras hardware in US-based data centers to ease the privacy concerns that many experts have expressed. DeepSeek, the app, sends your data (and metadata) to China. That should come as no surprise, as almost every app, especially free ones, captures user data for legitimate reasons.

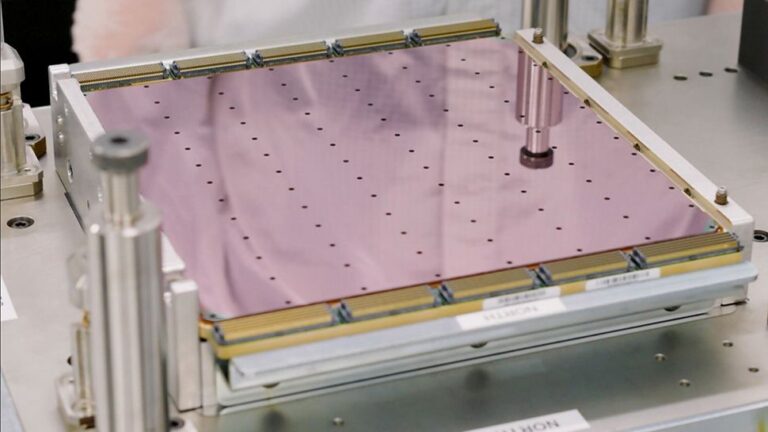

Cerebras’ wafer-scale solution puts it in a unique position to benefit from the impending AI cloud inference boom. The world’s fastest AI chip (or HPC accelerator), the WSE-3, has almost a million cores and a staggering 4 trillion transistors. More importantly, it has 44GB of on-chip SRAM, the fastest memory available and far faster than the HBM found on Nvidia’s GPUs. Because the WSE-3 is one huge die, its available memory bandwidth is enormous, several orders of magnitude higher than what the Nvidia H100 (and H200) can muster.
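To put "several orders of magnitude" into numbers, the sketch below compares peak memory bandwidth. The bandwidth figures are assumptions taken from vendor spec sheets, not from this article: 21 PB/s for the WSE-3’s on-chip SRAM, 3.35 TB/s for the H100 SXM and 4.8 TB/s for the H200. Peak bandwidth is only one factor in inference speed, so treat this as a rough illustration.

```python
import math

# Assumed vendor-published peak memory bandwidth, in bytes per second
WSE3_BW = 21e15    # Cerebras WSE-3 on-chip SRAM: 21 PB/s (assumption)
H100_BW = 3.35e12  # Nvidia H100 SXM HBM3: 3.35 TB/s (assumption)
H200_BW = 4.8e12   # Nvidia H200 HBM3e: 4.8 TB/s (assumption)


def orders_of_magnitude(a: float, b: float) -> float:
    """Base-10 logarithm of the ratio a / b."""
    return math.log10(a / b)


for name, bw in [("H100", H100_BW), ("H200", H200_BW)]:
    ratio = WSE3_BW / bw
    print(f"WSE-3 vs {name}: {ratio:,.0f}x "
          f"({orders_of_magnitude(WSE3_BW, bw):.1f} orders of magnitude)")
```

Under these assumed figures the gap is roughly 4,400x to 6,300x, that is between three and four orders of magnitude.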

A price war is brewing ahead of the WSE-4 launch

Pricing has not been disclosed yet, and Cerebras is usually coy about that particular detail. Last year, however, it revealed that Llama 3.1 405B on Cerebras Inference would cost $6 per million input tokens and $12 per million output tokens. Expect DeepSeek to be available for much less.
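Per-million-token pricing makes the cost of a request easy to estimate. The minimal sketch below applies the Llama 3.1 405B rates quoted above to a hypothetical request of 2,000 input tokens and 500 output tokens; the function name and token counts are illustrative only, and DeepSeek’s own rates on Cerebras are not yet known.

```python
def request_cost_usd(input_tokens: int, output_tokens: int,
                     input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in US dollars, given prices quoted per million tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


# Llama 3.1 405B on Cerebras Inference: $6/M input, $12/M output
INPUT_PRICE, OUTPUT_PRICE = 6.0, 12.0

# Hypothetical request size, for illustration only
cost = request_cost_usd(2_000, 500, INPUT_PRICE, OUTPUT_PRICE)
print(f"Cost of one request: ${cost:.4f}")
```

Output tokens are billed at twice the input rate here, so generation-heavy workloads such as long reasoning traces weigh more heavily on the bill than prompt length does.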

WSE-4, the next iteration of WSE-3, should deliver a significant boost to the performance of DeepSeek and similar reasoning models when it launches in 2026 or 2027 (depending on market conditions).

The arrival of DeepSeek is also likely to shake the proverbial AI money tree, bringing even more competition to established players like OpenAI and Anthropic and pushing prices down.

A quick look at the docsbot.ai LLM API calculator shows that OpenAI is almost always the most expensive option across all configurations, sometimes by several orders of magnitude.