ByteDance, the parent company of TikTok, has released Goku, a family of joint image-and-video generation models. The model appears to be named after Goku, the popular anime character from the Dragon Ball series.

This comes right after the company unveiled OmniHuman-1, an AI model that generates videos from images.

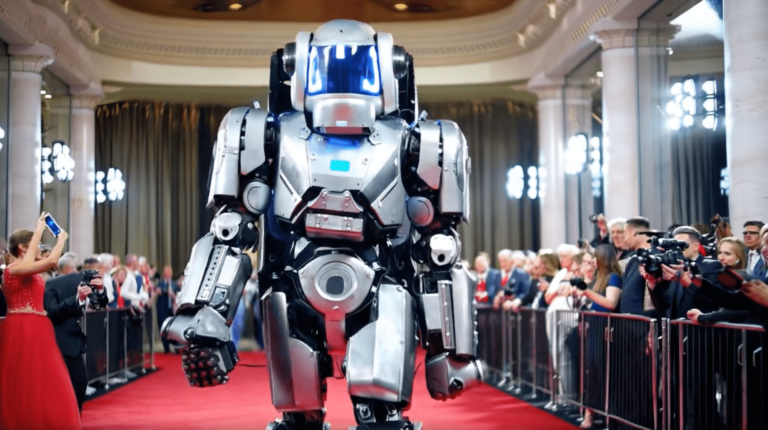

The researchers say the Goku model can help create product videos featuring AI-generated influencers, marketing avatars, landscape demos, Chinese poetry visualizations, portrait video demos, and more.

The research paper attributes the model's ability to generate high-quality videos to several key factors. One is the use of a Rectified Flow (RF) formulation for joint image and video generation. Another is a 3D joint image-video VAE that compresses image and video inputs into a shared latent space.
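To make the rectified flow idea concrete, here is a minimal sketch of the generic formulation: a sample is a straight-line blend between noise and data, and the network is trained to predict the constant velocity along that line. This is not ByteDance's code. The function names are hypothetical, and plain Python lists stand in for the latent tensors a real model would use.

```python
def interpolate(noise, data, t):
    """Point on the straight line from noise (t=0) to data (t=1)."""
    return [t * d + (1.0 - t) * n for n, d in zip(noise, data)]


def velocity_target(noise, data):
    """Regression target: the constant velocity along that line."""
    return [d - n for n, d in zip(noise, data)]


def rf_loss(predicted, noise, data):
    """Mean squared error between a predicted velocity and the target."""
    target = velocity_target(noise, data)
    return sum((p - v) ** 2 for p, v in zip(predicted, target)) / len(target)


def euler_sample(velocity_fn, noise, num_steps=10):
    """Generate a sample by integrating the velocity field from t=0 to t=1."""
    x = list(noise)
    dt = 1.0 / num_steps
    for step in range(num_steps):
        v = velocity_fn(x, step * dt)  # in practice, a trained network
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x
```

In a real system `velocity_fn` would be the trained Transformer operating on VAE latents. Because the target path is a straight line, a well-trained model can in principle be sampled with relatively few Euler steps.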

Additionally, the architecture features a full-attention Transformer, enhanced with techniques such as FlashAttention, sequence parallelism, Patch n' Pack, 3D RoPE position embedding, and QK normalization.
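Of the techniques listed, QK normalization is the easiest to illustrate in a few lines: queries and keys are normalized before their dot product, which keeps attention scores bounded regardless of how large the activations grow. The sketch below is a single-head, pure-Python illustration. The article does not say which normalization variant Goku uses, so the RMS normalization without a learnable scale here is an assumption, and all function names are hypothetical.

```python
import math


def rms_normalize(vec, eps=1e-6):
    """Scale a vector to unit root-mean-square (assumed norm variant)."""
    rms = math.sqrt(sum(x * x for x in vec) / len(vec) + eps)
    return [x / rms for x in vec]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def qk_norm_attention(queries, keys, values):
    """Single-head attention with queries and keys normalized first."""
    dim = len(queries[0])
    keys_n = [rms_normalize(k) for k in keys]
    outputs = []
    for q in queries:
        q_n = rms_normalize(q)
        scores = [
            sum(a * b for a, b in zip(q_n, k)) / math.sqrt(dim)
            for k in keys_n
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs
```

Scaling the queries by a large factor leaves the output almost unchanged, which is the stabilizing effect the technique is used for in large Transformers.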

The paper also reports that Goku delivers superior performance in both qualitative and quantitative evaluations, setting new benchmarks against competitors such as Luma, Open-Sora, Mira, and Pika.

Goku achieved 0.76 on GenEval and 83.65 on DPG-Bench for text-to-image generation, and 84.85 on VBench for text-to-video tasks. You can see the benchmark results below.

“We believe this work will provide valuable insights and practical advancements to the research community in developing joint image-and-video generation models,” the researchers said.

A model that can generate high-quality product videos with AI-generated influencers and other realistic visuals could offer significant benefits to content creators, influencers, marketers, and more.