Are you worried about your personal data being sent to China while visiting Deekseek R1, the leading leading language model (LLM) that has swept the internet? Well, there are ways to mitigate privacy-related issues while using it, and other LLMSs use them by running them locally on the device.

There are many tools for Windows PCs, but sometimes you can run open source AI models like Deepseek and Llama 3 natively, but LM Studio is one such free tool, and what we think So we found it to be one of the best, available. For PC, Mac and Linux. Although any device can run small models with fewer parameters, it is recommended to run these models on a PC with a fairly powerful CPU, GPU, and at least 16 GB of RAM.

LM Studio allows you to run state-of-the-art language models such as Llama 3.2, Mistral, Phi, Gemma, Deepseek, and Qwen 2.5 locally on your PC. It is recommended to run small models with less than 10 billion parameters, commonly known as distillation models.

These models are large versions of faster, more efficient versions, compressed using a process called distillation, condensing all knowledge into smaller packages. These models are optimized to ensure better results.

Depending on the AI model you choose, your PC may need around 10 GB of data and storage space, as resources that run LLM must be downloaded to your computer. Once everything is downloaded, you can access the AI model even when offline.

How to run Deepseek natively on a PC

LLM Studio is also available on Macs. (Express photo)

LLM Studio is also available on Macs. (Express photo)

Download and install LM Studio from lmstudio.ai.

Once installed, this tool will be asked to download and install the distilled (7 billion parameters) DeepSeek R1 model.

The story continues under this ad

You can also download and use other open source AI models directly from LM Studio.

If you are new to using AI models on a PC via LM Studio, you may need to manually load the model. Depending on the size of your model, it can take seconds to minutes to load completely.

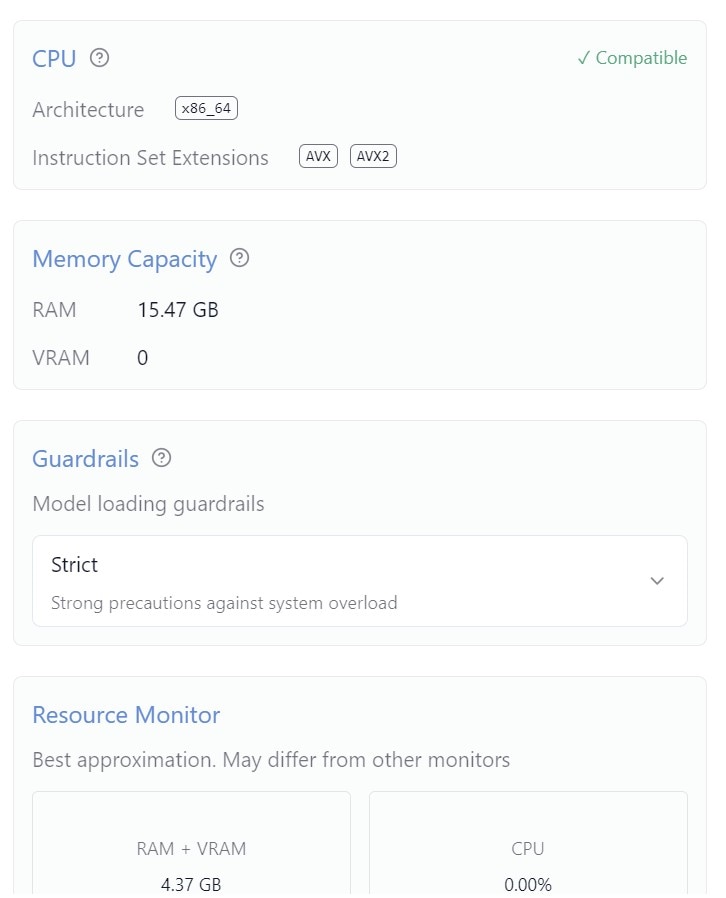

Important Things to Keep in mind while Running an AI Model

Make sure your device has enough computing power and RAM. (Express photo)

Make sure your device has enough computing power and RAM. (Express photo)

Depending on the computing capabilities of your PC, responses from locally running AI models can take time. Additionally, be aware of the use of system resources in the lower right corner. If your model is consuming too much RAM and CPU, it’s best to switch to an online model.

Additionally, you can run your AI models in three modes that provide minimal customization support. user. Power users who provide some customization features. Developer mode to enable additional customization features. If you have a laptop with an NVIDIA GPU, performance may improve from the AI model.

The story continues under this ad

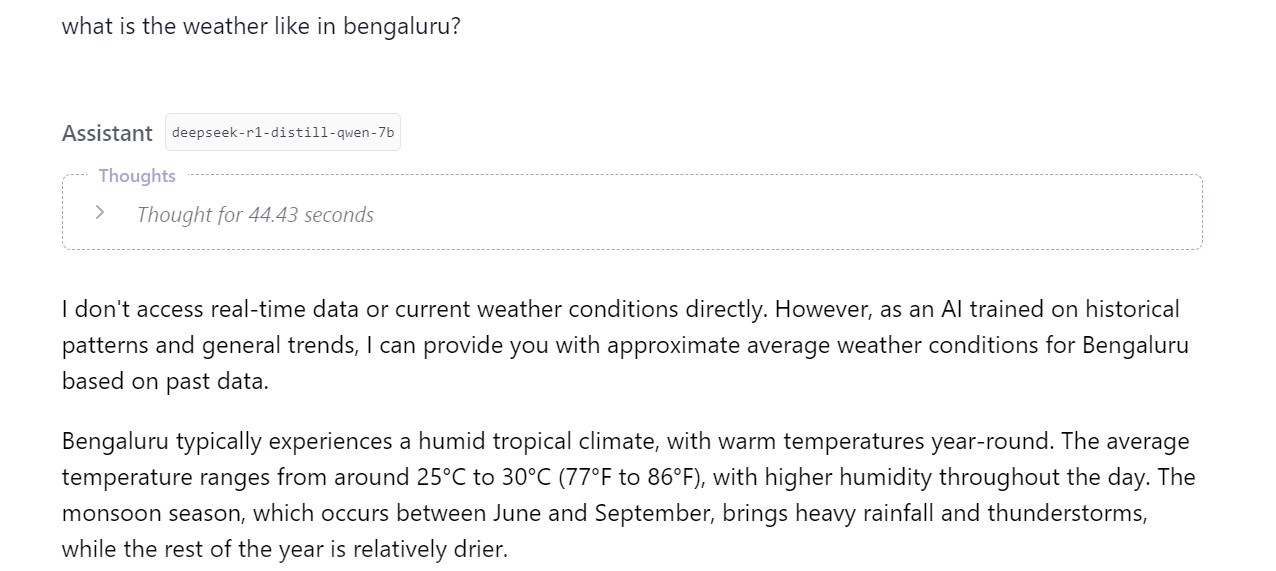

How to know if the model is actually running locally

A distilled version of Deepseek R1 running on a PC. (Express photo)

A distilled version of Deepseek R1 running on a PC. (Express photo)

The best way to check is to turn off Wi-Fi and disconnect the Ethernet on your PC. If the model continues to respond to queries even when offline, it is to indicate that it is running locally on your PC.

My observation

Even simple responses can take much longer while running the model locally. (Express photo)

Even simple responses can take much longer while running the model locally. (Express photo)

I ran the DeepSeek-R1-Distill-Qwen-7B-Gguf in a thin, bright notebook with an Intel Core Ultra 7 256V chip and 16GB of RAM. In my usage, I noticed that the model is pretty fast to respond to some queries, but the other response took about 30 seconds. During active use, the RAM usage was around 5 GB and the CPU usage was around 35%.

This does not affect the actual quality of the response, but depending on the query, you may need to wait longer for the AI model to generate the response.

For those worried about data privacy, running your model locally is definitely a great solution. Especially for those who don’t want to miss out on the latest innovations.