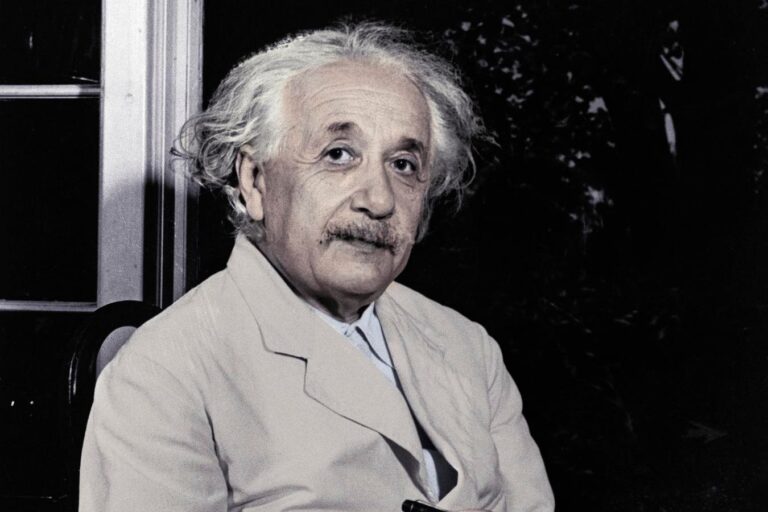

Dr. Albert Einstein sitting near a window.

Bettmann Archive

It seems inevitable that this kind of service would appear in an era of lifelike artificial intelligence and voice cloning. Character.ai is a platform where users can interact with avatars based on fictional personas or, in some cases, real people. Imagine asking questions of Einstein, JFK, or Kurt Cobain, people who have sadly shuffled off this mortal coil, and talking with a bot that has taken on that person's signature persona. Or, in an era when loneliness is widespread, build your own character and talk to it.

For a while, this technology seemed poised to take off, despite the risks attached. In a sense, it is a large, open playground, but without the right guardrails, problems are likely.

Let’s look at the company’s trajectory as a case study in what people are doing with powerful new LLM applications, and what those applications are doing to our world.

Fun characters and a passion for AI

Noam Shazeer, the creator of Character.ai, was a longtime Google employee who left the company, founded a startup, and then returned to the Google fold. Specifically, Wikipedia reports that Shazeer left Google in 2021, built Character.ai, and returned to Google in 2024 to work on Gemini. It makes sense that Google would want someone with this kind of experience as a technical lead on Gemini, a consumer product.

You can watch his interview with a16z’s Sarah Wang for his outlook on AI. She asks him a series of questions, then poses the same questions to his chatbot avatar.

Throughout, you can see that Shazeer himself is very enthusiastic about the power of large language models. He said he sees no hard limits to scaling, pointing to strong wins in model architecture, distributed algorithms, and quantization.

According to Shazeer, AI has brought us “many valuable applications,” and when he talked about creating Character.ai, he radiated enthusiasm.

Shazeer’s AI character also seemed to embrace the opportunities in new AI applications, but at the end of the interview, when asked about AI’s possibilities once agents are no longer limited to text communication, it made a strange admission.

In its prediction, Shazeer’s digital twin, a chatbot character, warned of great disruption to both society and personal well-being.

In a sense, it seems to be talking about itself, foreshadowing where the company is now.

Fighting liability

Just a few days ago, a court considered a motion to dismiss a case in which Character.ai is defending itself against allegations of safety failures.

Plaintiff Megan Garcia claims that her son developed an extreme emotional attachment to an AI character on the platform and eventually died by suicide.

This and other issues have raised serious questions about where bold new forms of communication intersect with the potential for harm, and they have damaged the platform’s reputation. At issue in the case are ideas of free speech, scrutiny of Section 230 of the Communications Decency Act, and the notion that users access technology at their own risk.

“In the motion to dismiss, counsel for Character AI asserts the platform is protected by the First Amendment, just like computer code,” writes TechCrunch’s Kyle Wiggers. “The motion may not persuade a judge, and Character AI’s legal justifications may change as the case proceeds. But the motion possibly hints at early elements of Character AI’s defense.”

So the case is working its way through the courts, but there is also a social imperative to protect people from the unintended side effects of giving LLMs the ability to create new personas.

Use and moderation

How do we protect users from themselves? How do we avoid straying into territory that causes human harm?

I came across this in a post from the Raspberry Pi Foundation published last week.

“As our lives become increasingly intertwined with AI-powered tools and systems, it is more important than ever to equip young people with the skills and knowledge they need to engage with AI safely and responsibly,” writes Mac Bowley. “AI literacy isn’t just about understanding the technology; it’s about fostering critical conversations on how to integrate AI tools into our lives while minimising potential harm.”

So “AI safety” should be part of everyday life, not just a concern for engineers and developers.

We must consider these questions and potential solutions as we probe the limits of what LLMs can do. By focusing on ethical AI and on appropriate support for young people, we may be able to create an environment that supports individuals and helps solve problems, such as climate change, that threaten the whole world.