Until a few weeks ago, few people in the Western world had heard of a small Chinese artificial intelligence (AI) company known as DeepSeek. But on January 20, it captured global attention when it released a new AI model called R1.

R1 is a “reasoning” model, meaning it works through tasks step by step and explains its working process to the user in detail. It is a more advanced version of DeepSeek’s V3 model, which was released in December. DeepSeek’s new offering is almost as powerful as rival OpenAI’s most advanced AI model, o1, but at a fraction of the cost.

Within days, DeepSeek’s app surpassed ChatGPT in new downloads and sent the stock prices of US tech companies tumbling. It also led OpenAI to claim that its Chinese rival had effectively stolen some of the crown jewels from OpenAI’s models to build its own.

In a statement to the New York Times, the company said:

We are aware of and reviewing indications that DeepSeek may have inappropriately distilled our models, and will share information as we know more. We take aggressive, proactive countermeasures to protect our technology and will continue working closely with the US government to protect the most capable models being built here.

The Conversation approached DeepSeek for comment, but it did not respond.

But even if DeepSeek copied, or in scientific terms “distilled”, at least some of ChatGPT to build R1, it is worth remembering that OpenAI itself stands accused of disregarding intellectual property while developing its models.

What is distillation?

Model distillation is a common machine learning technique in which a smaller “student model” is trained on the predictions of a larger and more complex “teacher model”.

When training is complete, the student may be nearly as good as the teacher, but it will represent the teacher’s knowledge more efficiently and compactly.

To do this, there is no need to access the inner workings of the teacher. All that is needed to pull off this trick is to ask the teacher model enough questions to train the student.

This is what OpenAI claims DeepSeek has done: queried OpenAI’s o1 at massive scale and used the observed outputs to train DeepSeek’s own, more efficient models.
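The idea can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration: the `teacher` function is a hypothetical stand-in for a large model that can only be queried, the student is a two-parameter logistic model, and the names `distill` and `student_predict` are invented here. In this toy the student happens to have the same form as the teacher, so it can match it closely; real distillation uses a much smaller student than the teacher and is far more involved. It is not a description of how any company actually trained its models.

```python
import math
import random


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def teacher(x: float) -> float:
    """Hypothetical black-box teacher: returns P(label = 1 | x).

    Stands in for a large model that can be queried but not inspected.
    """
    return sigmoid(3.0 * x - 1.5)


def student_predict(w: float, b: float, x: float) -> float:
    """Prediction of the small student model with parameters w and b."""
    return sigmoid(w * x + b)


def distill(queries, teacher_fn, epochs=2000, lr=0.5):
    """Train the student using only the teacher's answers to the queries."""
    # Step 1: ask the teacher many questions and record its outputs.
    targets = [teacher_fn(x) for x in queries]

    # Step 2: fit the student to those outputs with gradient descent
    # on the cross-entropy between student and teacher probabilities.
    w, b = 0.0, 0.0
    n = len(queries)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, t in zip(queries, targets):
            error = student_predict(w, b, x) - t
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


if __name__ == "__main__":
    random.seed(0)
    queries = [random.uniform(-2.0, 2.0) for _ in range(200)]
    w, b = distill(queries, teacher)
    for x in (-1.0, 0.0, 1.0):
        print(f"x={x:+.1f}  teacher={teacher(x):.3f}  "
              f"student={student_predict(w, b, x):.3f}")
```

Note that `distill` never looks inside `teacher`; it only calls it. That is the point made above: enough question-and-answer pairs are sufficient to train the student.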

A fraction of the resources

DeepSeek claims that both the training and the use of R1 required only a fraction of the resources needed to develop its competitors’ best models.

There is reason to be skeptical of some of the company’s marketing hype. For example, a new independent report suggests that hardware spending for R1 was as high as US$500 million. But even so, DeepSeek’s model was built very quickly and efficiently compared with rival models.

This may be because DeepSeek distilled OpenAI’s output. However, there is currently no way to prove this conclusively. One method that is in the early stages of development is watermarking AI outputs. This adds invisible patterns to the outputs, similar to those applied to copyrighted images. There are various ways to do this in theory, but none is effective or efficient enough to have made it into practice.

There are other reasons that help explain DeepSeek’s success, such as the company’s deep and challenging technical work.

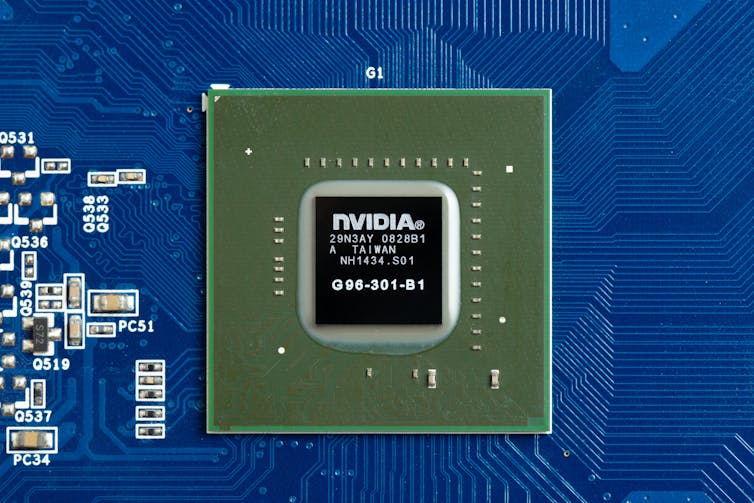

The technical advances made by DeepSeek include making use of less powerful but cheaper AI chips (also known as graphical processing units, or GPUs).

DeepSeek had no choice but to adapt after the US banned the export of its most powerful AI chips to China.

While Western AI companies can buy these powerful units, the export ban forced Chinese companies to innovate to make the most of cheaper alternatives.

A series of lawsuits

OpenAI’s terms of use explicitly state that nobody may use its AI models to develop competing products. However, its own models are trained on massive datasets scraped from the web. These datasets contained a substantial amount of copyrighted material, which OpenAI says it is entitled to use on the basis of “fair use”:

Training AI models using publicly available internet materials is fair use, as supported by long-standing and widely accepted precedents. We view this principle as fair to creators, necessary for innovators, and critical for US competitiveness.

This argument will be tested in court. Newspapers, musicians, authors and other creatives have filed a series of lawsuits against OpenAI, citing copyright infringement.

Of course, this is quite different from what OpenAI accuses DeepSeek of doing. Nevertheless, OpenAI is not attracting much sympathy for its claim that DeepSeek illegitimately harvested its model’s output.

The war of words and lawsuits is an artifact of how the rapid advance of AI has outpaced the development of clear legal rules for the industry. And while these recent events may reduce the power of AI incumbents, much depends on the outcome of the various ongoing legal disputes.

Shaking up the global conversation

DeepSeek has shown that cutting-edge models can be developed cheaply and efficiently. It remains to be seen whether it can compete with OpenAI on a level playing field.

Over the weekend, OpenAI tried to demonstrate its supremacy by releasing its most advanced consumer model, o3-mini.

OpenAI claims this model substantially outperforms even its own previous market-leading version, o1, and is “the most cost-effective model in the reasoning series.”

These developments herald an era of increased choice for consumers, with a diversity of AI models on the market. This is good news for users: competitive pressure will make models cheaper to use.

And the benefits extend further.

Training and using these models places a massive strain on global energy consumption. As these models become more ubiquitous, we all benefit from improvements in their efficiency.

The rise of DeepSeek certainly marks new territory for building models more cheaply and efficiently. Perhaps it will also shake up the global conversation about how AI companies should collect and use their training data.

(Authors: Shaanan Cohney, Lecturer in Cybersecurity, University of Melbourne, and a Senior Lecturer at the University of Melbourne)

This article is republished from The Conversation under a Creative Commons license. Read the original article.

(Except for the headline, this story has not been edited by NDTV staff and is published from a syndicated feed.)