TikTok’s $300 billion parent company, ByteDance, is one of the busiest AI developers in the world. It plans to spend billions of dollars on AI chips this year, and its technology is giving Sam Altman’s OpenAI a run for its money.

ByteDance’s Doubao AI chatbot is currently the most popular AI assistant in China, with 78.6 million active users as of January.

That makes it the world’s second most used AI app, behind OpenAI’s ChatGPT (349.4 million MAUs). The recently released Doubao-1.5-Pro is claimed to match the performance of OpenAI’s GPT-4o at a fraction of the cost.

As Counterpoint Research notes of Doubao’s positioning and capabilities: “Like international rival ChatGPT, the foundation of Doubao’s appeal is multimodality, offering advanced text, image and audio processing capabilities.”

It can also generate music.

In September, ByteDance added an AI music generation feature to its Doubao app, which apparently “supports more than 10 different musical styles, and allows you to write lyrics and compose music in just one click.”

However, this is not the end of ByteDance’s interest in building music AI technology.

On September 18th, ByteDance’s Doubao team announced the launch of a massive suite of AI music models called Seed-Music.

The team claimed that Seed-Music “gives people more opportunities in creating music.”

Founded in 2023, the ByteDance Doubao (Seed) team is “dedicated to building an industry-leading AI foundation model.”

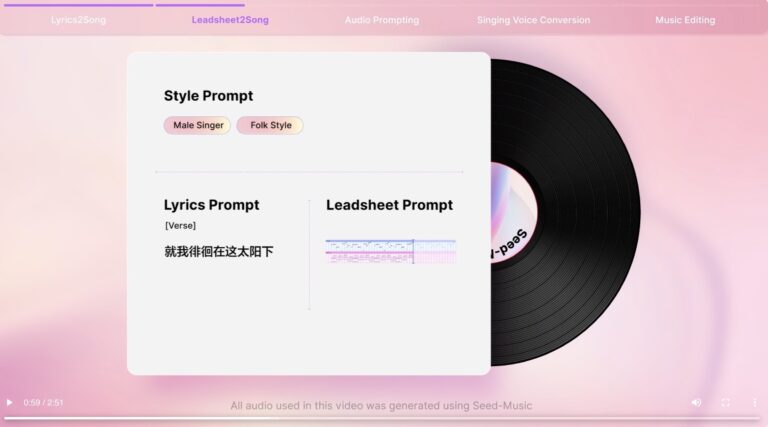

According to the official Seed-Music release announcement in September, the AI music product “supports score-to-song conversion, controllable generation, music and lyrics editing, and low-threshold voice cloning.”

The announcement also claims that it “cleverly combines the strengths of language models and diffusion models and integrates them into the music composition workflow, making it suitable for a variety of music creation scenarios for both beginners and experts.”

The official Seed-Music website contains many audio clips that demonstrate what it can do.

You can hear some of them below:

But what’s more important is how Seed-Music was built.

Fortunately, the Doubao team has released a technical report explaining the inner workings of the Seed-Music project.

MBW has read it cover to cover.

In the introduction to ByteDance’s research paper, which can be read in full here, the company’s researchers state that “music is deeply embedded in human culture” and that “throughout human history, vocal music has accompanied important moments in life and society, from the call of love to the harvest of the seasons.”

“Our goal is to leverage modern generative modeling technology, not to replace human creativity, but to lower the barriers to music creation.”

ByteDance research paper for Seed-Music

The intro continues: “Today, vocal music remains at the heart of global culture. However, creating vocal music is a complex, multi-stage process that involves pre-production, writing, recording, editing, mixing and mastering, making it a challenge for most people.”

“Our goal is to leverage modern generative modeling technology to lower barriers to music creation rather than replacing human creativity. By providing interactive creation and editing tools, we aim to enable both beginners and experts to participate in different stages of the music production process.”

How Seed-Music works

ByteDance’s researchers explain that the “unified framework” behind Seed-Music is “built on three basic representations: audio tokens, symbolic tokens, and vocoder latents.”

As shown in the chart below, the audio token-based pipeline works as follows: “(1) Input embedders convert multimodal control inputs, such as music style descriptions, lyrics, reference audio, or music scores, into a prefix embedding sequence. (2) The auto-regressive LM generates a sequence of audio tokens. (3) The diffusion transformer model generates continuous vocoder latents. (4) The acoustic vocoder generates high-quality 44.1kHz stereo audio.”
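To make the four stages easier to picture, here is a minimal, purely illustrative sketch of how such a pipeline chains together. It is not ByteDance’s code: every function and class name is hypothetical, and each stage is a toy stand-in for what would really be a large neural model. Only the order of the stages and the 44.1kHz stereo target come from the paper’s description.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

SAMPLE_RATE = 44_100  # the paper quotes 44.1kHz stereo output


@dataclass
class Controls:
    """Hypothetical container for the multimodal control inputs."""
    style: str
    lyrics: str


def embed_inputs(controls: Controls) -> list[int]:
    """Stage 1 stand-in: turn the control inputs into a prefix sequence."""
    text = f"{controls.style}|{controls.lyrics}"
    return [ord(ch) % 256 for ch in text]


def generate_audio_tokens(prefix: list[int], n_tokens: int) -> list[int]:
    """Stage 2 stand-in: emit tokens one at a time, each depending on the
    prefix plus everything generated so far (the auto-regressive idea)."""
    rng = random.Random(sum(prefix))  # seeded so the toy is repeatable
    tokens: list[int] = []
    for _ in range(n_tokens):
        context = prefix + tokens
        tokens.append((context[-1] + rng.randrange(1024)) % 1024)
    return tokens


def tokens_to_latents(tokens: list[int]) -> list[float]:
    """Stage 3 stand-in: map discrete tokens to continuous values in [-1, 1]."""
    return [t / 511.5 - 1.0 for t in tokens]


def vocode(latents: list[float], samples_per_latent: int = 4) -> list[tuple[float, float]]:
    """Stage 4 stand-in: upsample latents into (left, right) sample pairs."""
    return [(v, v) for v in latents for _ in range(samples_per_latent)]


def generate(controls: Controls, n_tokens: int = 8) -> list[tuple[float, float]]:
    """Run the four stages in the order the paper lists them."""
    prefix = embed_inputs(controls)
    tokens = generate_audio_tokens(prefix, n_tokens)
    latents = tokens_to_latents(tokens)
    return vocode(latents)


audio = generate(Controls(style="upbeat pop", lyrics="la la la"))
print(len(audio), "stereo samples at", SAMPLE_RATE, "Hz")
```

The point of the sketch is the hand-off between representations: text-like controls become a prefix, the prefix conditions a sequence of discrete tokens, the tokens become continuous latents, and only the last stage produces anything resembling a waveform.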

In contrast to the audio token-based pipeline, the symbolic token-based generator, which can be seen in the chart below, is “designed to predict symbolic tokens for better interpretability” in Seed-Music.

According to the research paper, “symbolic representations such as MIDI, ABC notation, and MusicXML are discrete and can be easily tokenized into LM-compatible formats.”

ByteDance’s researchers add: “Unlike audio tokens, symbolic representations are interpretable and allow creators to read and modify them directly. However, due to their lack of acoustic detail, the system inevitably has to rely heavily on the ability of the renderer to generate subtle acoustic properties for musical performance. Training such a renderer requires a large dataset of paired audio and symbolic transcriptions.”
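A small sketch helps show why symbolic formats are described as both easy to tokenize and easy to edit. The token scheme below (`PITCH_…` and `DUR_…` strings) is an assumption invented for illustration, not the vocabulary used in Seed-Music; pitches are standard MIDI note numbers.

```python
from typing import NamedTuple


class Note(NamedTuple):
    pitch: int     # MIDI note number, e.g. 60 = middle C
    duration: int  # length in sixteenth notes


def tokenize(notes: list) -> list:
    """Flatten notes into a discrete token sequence a language model could read."""
    tokens = []
    for note in notes:
        tokens.append(f"PITCH_{note.pitch}")
        tokens.append(f"DUR_{note.duration}")
    return tokens


def detokenize(tokens: list) -> list:
    """Rebuild the notes from alternating PITCH_/DUR_ tokens."""
    notes = []
    for pitch_tok, dur_tok in zip(tokens[0::2], tokens[1::2]):
        notes.append(Note(int(pitch_tok.split("_")[1]), int(dur_tok.split("_")[1])))
    return notes


def transpose(tokens: list, semitones: int) -> list:
    """Edit the tokens directly: shift every pitch, leave durations alone."""
    shifted = []
    for tok in tokens:
        kind, value = tok.split("_")
        if kind == "PITCH":
            tok = f"PITCH_{int(value) + semitones}"
        shifted.append(tok)
    return shifted


melody = [Note(60, 4), Note(62, 4), Note(64, 8)]  # C, D, E
tokens = tokenize(melody)
print(tokens)
print(detokenize(transpose(tokens, 2)))  # the same phrase, a whole tone higher
```

Because each token has an obvious musical meaning, a person can read the sequence and change it by hand. What the tokens do not carry is how the notes should actually sound, which is the gap the paper says a renderer has to fill.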

An obvious question…

Now you’re probably asking where the music of the Beatles and Michael Jackson comes into all this.

We’re almost there. First, we need to talk about MIR.

According to the Seed-Music research paper, “to extract the symbolic features from audio for training the above system,” the team behind the technology used a variety of “in-house Music Information Retrieval (MIR) models.”

According to this very clear explainer on Dataloop, MIR is “a subcategory of AI models that focuses on extracting meaningful information from musical data such as audio signals, lyrics, and metadata.”

Put another way: a metadata scraper. Feed a song into the jaws of a MIR model and it will analyze, predict and present data including pitch, beats per minute (BPM), lyrics, chords, and more.
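For a rough sense of the kind of data involved, here is a toy sketch of two such measurements. It assumes the hard part (finding beat times and a fundamental frequency in the raw audio, which is what real MIR models do) has already been done; the function names are hypothetical, and only the final arithmetic is shown.

```python
import math
from statistics import median

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def estimate_bpm(beat_times: list) -> float:
    """Tempo from beat timestamps (in seconds): 60 / median gap between beats."""
    if len(beat_times) < 2:
        raise ValueError("need at least two beats to measure a tempo")
    gaps = [later - earlier for earlier, later in zip(beat_times, beat_times[1:])]
    return 60.0 / median(gaps)


def frequency_to_note(freq_hz: float) -> str:
    """Nearest equal-tempered note name for a frequency, with A4 = 440 Hz."""
    midi = round(69 + 12 * math.log2(freq_hz / 440.0))
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


print(estimate_bpm([0.0, 0.5, 1.0, 1.5, 2.0]))  # beats half a second apart
print(frequency_to_note(440.0))
```

Using the median gap rather than the mean keeps one mistimed beat from skewing the tempo estimate.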

Research into music information retrieval first gained popularity for its ability to support the digital classification of music by genre, mood, tempo and so on.

However, the leading generative AI music platforms are now reportedly using MIR research to improve their product output.

Do you see where this is heading? Yes, of course you do.

ByteDance’s research team has successfully built its own in-house MIR models, which the team used to “extract the symbolic features from audio” and to build part of the Seed-Music system. These MIR models include:

AI, are you OK? Are you OK, AI?

Dig deep into the research ByteDance has published on MIR models focused on structural analysis and you will find a research paper titled:

“To Catch A Chorus, Verse, Intro, or Anything Else: Analyzing a Song with Structural Functions.”

It was published in 2022. You can read it here.

According to the paper, “Conventional music structure analysis algorithms aim to divide a song into segments and to group them with abstract labels (e.g., ‘A’, ‘B’, and ‘C’).

“However, explicitly identifying the function of each segment (e.g., ‘verse’ or ‘chorus’) is rarely attempted, but has many applications.”

The research paper introduces “a multi-task deep learning framework to model these structural semantic labels directly from audio by estimating ‘verseness’, ‘chorusness’, and so forth, as a function of time.”
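To illustrate what “as a function of time” means in practice, the sketch below takes one score curve per label, sampled at regular time frames, picks the strongest label in each frame and merges runs of the same label into sections. This is a simplified assumption about how such curves could be read, not the method from the paper, and the scores are made-up numbers.

```python
def label_frames(activations: dict) -> list:
    """Pick, for every time frame, the label whose curve is highest."""
    labels = list(activations)
    n_frames = len(activations[labels[0]])
    return [max(labels, key=lambda lab: activations[lab][i]) for i in range(n_frames)]


def merge_segments(frame_labels: list, frame_seconds: float) -> list:
    """Collapse runs of identical frame labels into (start, end, label) sections."""
    segments = []
    start = 0
    for i in range(1, len(frame_labels) + 1):
        # Close the current run at the end of the song or when the label changes.
        if i == len(frame_labels) or frame_labels[i] != frame_labels[start]:
            segments.append((start * frame_seconds, i * frame_seconds, frame_labels[start]))
            start = i
    return segments


# Made-up "introness", "verseness" and "chorusness" curves, one value per 0.5s frame.
curves = {
    "intro":  [0.9, 0.8, 0.1, 0.1, 0.1, 0.1],
    "verse":  [0.1, 0.2, 0.8, 0.7, 0.2, 0.1],
    "chorus": [0.0, 0.0, 0.1, 0.2, 0.7, 0.8],
}
for start, end, label in merge_segments(label_frames(curves), frame_seconds=0.5):
    print(f"{start:.1f}s to {end:.1f}s: {label}")
```

Labeling sections by function in this way is what separates the approach from the older “A”, “B”, “C” style of segmentation the paper describes.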

To carry out this study, the ByteDance team used four “public datasets,” including one called the “Isophonics” dataset.

The source of the Isophonics dataset used by ByteDance’s researchers is likely to be Isophonics.net, described as the home of software and data resources from the Centre for Digital Music (C4DM) at Queen Mary, University of London.

The Isophonics website states that its “chord, onset, and segmentation annotations have been used by many researchers in the MIR community.”

The website explains: “The annotations published here fall into four categories: chords, keys, structural segmentations, and beats/bars.”

In 2022, ByteDance’s researchers gave a video presentation of their paper, To Catch A Chorus, Verse, Intro, or Anything Else: Analyzing a Song with Structural Functions, for the International Conference on Acoustics, Speech, and Signal Processing (ICASSP).

You can see this presentation below.

The video caption describes “a new system/way of segmenting songs into sections such as chorus, verse, intro, outro, bridge, and more.”

It shows findings related to songs by the Beatles, Michael Jackson, Avril Lavigne and other artists.

It is important to be careful here: this is not to suggest that ByteDance’s AI music generation technology was “trained” using songs from popular artists such as The Beatles and Michael Jackson.

However, as you can see, a dataset containing annotations for such songs has clearly been used as part of a ByteDance research project in this field.

Any analysis of, or reference to, popular songs and their annotations in research carried out or funded by a multi-billion-dollar technology company is certain to raise questions among many in the music industry, particularly those employed to protect its copyrights.

“We firmly believe that AI technology should support, rather than disrupt, the livelihoods of musicians and artists. AI should serve as a tool for artistic expression, because true art always comes from human intentions.”

ByteDance Seed-Music researchers

At the bottom of ByteDance’s Seed-Music research paper is a section dedicated to ethics and safety.

According to ByteDance’s researchers, they “firmly believe that AI technology should support, rather than disrupt, the livelihoods of musicians and artists.”

They add: “AI should serve as a tool for artistic expression, because true art always comes from human intentions. Our goal is to present this technology as an opportunity to advance the music industry by lowering the barriers to entry, providing smarter, faster editing tools, creating new and exciting sounds, and opening up new possibilities for artistic exploration.”

ByteDance’s researchers also outline specific ethical issues: “We recognize that AI tools are inherently prone to bias, and our goal is to maintain neutrality and provide tools that benefit everyone. To achieve this, we aim to provide a wide range of control elements that help minimize existing biases.

“We believe that by giving artistic choices back to users, we can promote equality, maintain creativity and increase the value of their work. With these priorities in mind, we hope that our breakthroughs in lead sheet tokens will emphasize our commitment to empowering musicians and promoting human creativity through AI.”

In terms of safety and deepfake concerns, the researchers explain that “in vocal music, we recognize how the singing voice evokes one of the most powerful expressions of an individual’s identity.”

They add: “To protect against misuse of this technology to impersonate others, we employ a process similar to the safety measures set out in Seed-TTS. This includes a multi-stage verification method for spoken content and voice, to ensure that the registered voice tokens contain only the voices of authenticated users.

“We also implement a multi-level watermarking scheme and duplication checks throughout the generation process. The latest systems for music generation fundamentally reshape culture and change the relationship between artistic creation and consumption.

“We believe that with a strong consensus among stakeholders, these technologies will revolutionize the music creation workflow and benefit music beginners, experts and listeners alike.”

Music Business Worldwide