Google DeepMind, a UK-based research company, recently published new research exploring techniques to make better use of inference-time compute.

Google DeepMind’s technique, Mind Evolution, uses a language model to generate a diverse set of candidate solutions, which are then recombined and refined based on feedback from an evaluator.

Unlike sequential reasoning approaches such as self-refinement and tree search, which require evaluating individual reasoning steps, the authors claim that Mind Evolution performs a global refinement of complete solutions.
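The generate, recombine, and refine loop described above can be sketched as a small evolutionary search. This is a toy illustration under stated assumptions, not the authors' implementation: the ordering task, the `CONSTRAINTS` list, and the functions `propose`, `recombine`, `refine`, and `evolve` are all hypothetical, and simple random operations stand in for the language model calls.

```python
import random

ITEMS = ["A", "B", "C", "D", "E"]
# Hypothetical task: each pair (x, y) means "x must come before y".
CONSTRAINTS = [("A", "B"), ("B", "C"), ("A", "D"), ("D", "E")]


def evaluate(candidate):
    """Score a complete candidate; return (score, violated constraints)."""
    pos = {item: i for i, item in enumerate(candidate)}
    violated = [(x, y) for x, y in CONSTRAINTS if pos[x] > pos[y]]
    return len(CONSTRAINTS) - len(violated), violated


def propose(rng):
    """Stand-in for the language model drafting a fresh candidate."""
    return rng.sample(ITEMS, len(ITEMS))


def recombine(parent_a, parent_b, rng):
    """Keep a prefix of one parent, then fill in the rest in the other's order."""
    cut = rng.randint(1, len(parent_a) - 1)
    head = parent_a[:cut]
    return head + [item for item in parent_b if item not in head]


def refine(candidate, violated, rng):
    """Stand-in for feedback-driven refinement: repair one violated constraint."""
    if not violated:
        return list(candidate)
    x, y = rng.choice(violated)
    fixed = [item for item in candidate if item != x]
    fixed.insert(fixed.index(y), x)  # move x to just before y
    return fixed


def evolve(population_size=8, generations=20, seed=0):
    rng = random.Random(seed)
    population = [propose(rng) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=lambda c: evaluate(c)[0], reverse=True)
        if evaluate(population[0])[0] == len(CONSTRAINTS):
            break  # a candidate already satisfies every constraint
        parents = population[: population_size // 2]  # keep the fitter half
        children = []
        while len(parents) + len(children) < population_size:
            a, b = rng.sample(parents, 2)
            child = recombine(a, b, rng)
            children.append(refine(child, evaluate(child)[1], rng))
        population = parents + children
    return max(population, key=lambda c: evaluate(c)[0])


best = evolve()
print(best, evaluate(best))
```

Note that the evaluator only ever sees whole candidates, which mirrors the article's point about global refinement: no individual step is scored in isolation.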

The TravelPlanner benchmark evaluates a model’s ability to organize travel plans “for users who express their preferences and constraints.” Across various levels of travel planning difficulty, the Mind Evolution technique outperformed other techniques.

The meeting planning task evaluates the model for its ability to schedule meetings based on constraints such as number of people in the meeting, availability, location, and travel time.

The authors also suggested that this task is different from that of TravelPlanner because not all meetings can be scheduled due to conflicting constraints such as availability and location.
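To make the idea of a programmatic evaluator for this kind of task concrete, here is a minimal sketch that checks a proposed schedule against availability, location, and travel time, and returns both a score and textual feedback. The data layout, the `Meeting` class, and `evaluate_schedule` are assumptions made for illustration; they are not taken from the benchmark or the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Meeting:
    person: str
    location: str
    start: int  # minutes since midnight
    end: int


def evaluate_schedule(schedule, availability, travel_minutes, start_location, start_time):
    """Count the meetings that are feasible in order and explain the ones that are not.

    availability maps person -> (location, window_start, window_end, min_length).
    travel_minutes maps (from_location, to_location) -> minutes.
    """
    location, clock = start_location, start_time
    valid, feedback = 0, []
    for m in schedule:
        place, opens, closes, min_length = availability[m.person]
        if m.location != place:
            feedback.append(f"{m.person}: wrong location {m.location}")
            continue
        travel = 0 if location == m.location else travel_minutes[(location, m.location)]
        arrival = clock + travel
        if arrival > m.start:
            feedback.append(f"{m.person}: arrive at {arrival}, after start {m.start}")
        elif m.start < opens or m.end > closes:
            feedback.append(f"{m.person}: outside availability {opens}-{closes}")
        elif m.end - m.start < min_length:
            feedback.append(f"{m.person}: shorter than {min_length} minutes")
        else:
            valid += 1
            location, clock = m.location, m.end  # only feasible meetings move us
    return valid, feedback
```

Because conflicting constraints can make some meetings impossible, the sketch scores a plan by how many meetings it schedules validly rather than requiring all of them. The feedback strings are the kind of critique a refinement step could consume.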

The reported results show that Mind Evolution outperforms the baseline strategies, achieving a success rate of 85.0% on the validation set and 83.8% on the test set. Notably, the authors’ two-stage approach using Gemini 1.5 Pro achieved success rates of 98.4% and 98.2% on validation and test, respectively.

That said, the authors also acknowledge that Mind Evolution’s “key limitations” are that it focuses primarily on natural language planning problems in which “proposed solutions can be evaluated and critiqued programmatically.”

Inference-time compute is a widely discussed concept in large language models, most notably in OpenAI’s o1 reasoning model. The technique is considered an effective way to address scaling challenges in large language models.

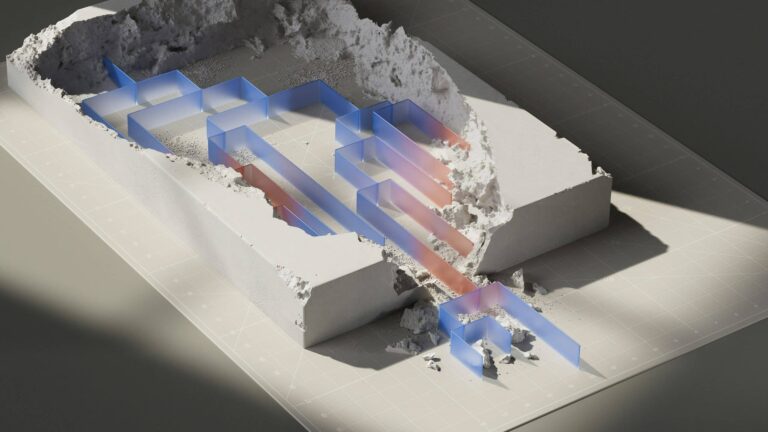

A few days ago, Google DeepMind also published a study introducing inference time scaling for diffusion models.

The study, titled “Inference-Time Scaling for Diffusion Models beyond Scaling Denoising Steps,” investigates the impact of giving image generation models additional compute while they generate results.

Last December, Google announced the Gemini 2.0 Flash Thinking model, which offers advanced reasoning capabilities and shows its thinking. Logan Kilpatrick, a senior product manager at Google, said the model unlocks stronger reasoning capabilities and shows its thoughts.